It is now nearly seven years since the in-press release of the notorious “Lewandowsky, Oberauer & Gignac – NASA faked the moon landing: Therefore (Climate) Science is a Hoax: An Anatomy of the Motivated Rejection of Science”, and 26 March is the sixth anniversary of its final publication in amended form. Last month I was prompted to look again at the underlying survey data by a short article at Medium by Jose Duarte. I fully agree with the differences he identifies between the “published” and “extended” data files, now both archived on a Bristol University server, and have found some others. However, the subject of this post is very different.

Main Thesis

Based on the “Moon Hoax” survey data, when confronted with an unknown conspiracy theory, the more sceptical a person is of climate “science”, the more likely they are to mildly disagree with the conspiracy, whilst the more accepting a person is of “climate science”, the more likely they are to strongly reject it. Climate sceptics tend to be more sceptical of statements new to them, whilst those believing in climate science tend to reject such statements roundly. When presented with a conspiracy theory that at least a strong minority agree with, the degree of acceptance shows that sceptics tend to be more conservative or neo-liberal, whilst climate alarmists lean more towards socialist / progressive / (US) liberal views.

The Iraq War Question

Among the first things I found in the “extended” file on the Bristol University server were the responses to the missing Iraq War question, located at the start of the conspiracy theory questions. The question was:

The Iraq War in 2003 was launched for reasons other than to remove WMD from Iraq.

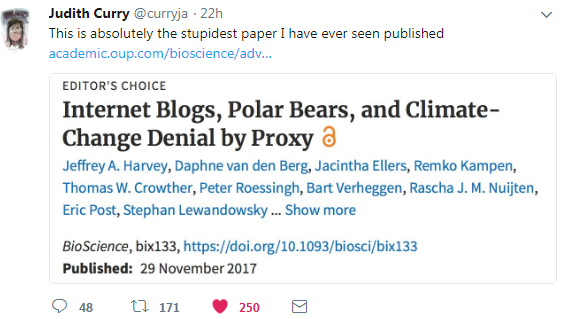

As in my September 2012 analysis, I produced a pivot table of the Iraq War responses against the average of the four “CO2 Science” questions, and did the same for the 14 conspiracy theory questions.
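The pivot idea can be sketched in a few lines of Python. This is a minimal sketch of the approach, not the method used to produce the figures in this post. It assumes each respondent is a record holding the four CO2 Science answers (`co2`) and the conspiracy theory answers (`cy`) on the survey's 1 to 4 scale, with 1 as strong disagreement. The field names, the toy records and the `response_band` rounding rule (nearest whole number, halves rounded up) are illustrative assumptions, not taken from the survey files.

```python
import math
from collections import defaultdict
from statistics import mean

def response_band(avg: float) -> int:
    # Assumed banding rule: nearest whole number, halves rounded up.
    return math.floor(avg + 0.5)

def pivot_mean_by_co2_band(respondents):
    """Group respondents by the band of their average CO2 Science answer
    and return the mean raw conspiracy score for each band."""
    groups = defaultdict(list)
    for r in respondents:
        co2_band = response_band(mean(r["co2"]))
        groups[co2_band].append(mean(r["cy"]))
    return {band: mean(scores) for band, scores in sorted(groups.items())}

# Hypothetical toy records for illustration only; NOT the survey data.
sample = [
    {"co2": [1, 1, 2, 1], "cy": [2, 2, 1, 2]},
    {"co2": [4, 4, 4, 3], "cy": [1, 1, 2, 2]},
    {"co2": [4, 4, 4, 4], "cy": [1, 1, 1, 1]},
]
print(pivot_mean_by_co2_band(sample))  # {1: 1.75, 4: 1.25}
```

A spreadsheet pivot table does the same grouping and averaging; the code simply makes the banding step explicit.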

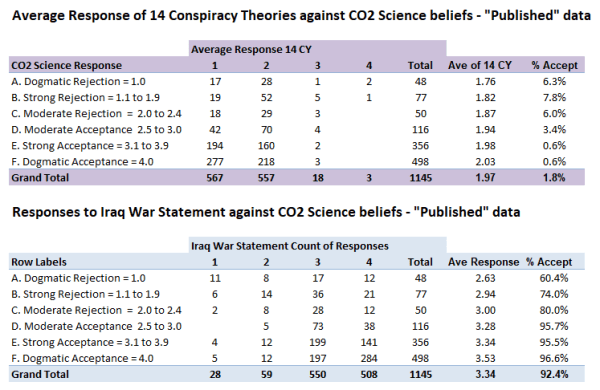

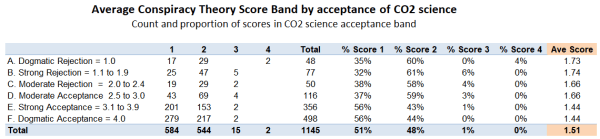

Figure 1: Comparison of responses to the 14 Conspiracy Theory statements and the IraqNot4WMD with the average response band to the four CO2 Science questions. Note that the “average response” is the raw average response, not the average of the response bands. For instance, if a respondent answered “1” to 8 statements and “2” to 6, the raw average response would be 1.43 and the response band would be “1”.
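The distinction in the note can be checked with a small worked example. The sketch below assumes the same rounding rule as above (nearest whole number, halves rounded up; the post does not state how band cut-offs are handled). It reproduces the 1.43 raw average and band “1”, then uses a second, hypothetical respondent to show that averaging raw scores and averaging bands give different answers for the same people.

```python
import math
from statistics import mean

def response_band(avg: float) -> int:
    # Assumed rule: nearest whole number, halves rounded up.
    return math.floor(avg + 0.5)

# The example from the note: 8 answers of "1" and 6 answers of "2".
respondent_a = [1] * 8 + [2] * 6
raw_a = mean(respondent_a)                      # 20 / 14
print(round(raw_a, 2), response_band(raw_a))    # 1.43 1

# A second, hypothetical respondent: 8 answers of "2" and 6 of "3".
respondent_b = [2] * 8 + [3] * 6
raw_b = mean(respondent_b)                      # 34 / 14, band 2

# Averaging the raw averages vs averaging the bands.
avg_of_raw = mean([raw_a, raw_b])
avg_of_bands = mean([response_band(raw_a), response_band(raw_b)])
print(round(avg_of_raw, 2), avg_of_bands)       # 1.93 1.5
```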

The first thing to note from Figure 1 is that the vast majority of responses, on average, reject all 14 conspiracy theories. The conclusion from these figures is that, with few exceptions, those who reject climate science (skeptics/deniers/contrarians) also reject conspiracy theories, just like those who accept climate “science”. Two notable exceptions are responses 860 and 889, which answered 1 to all four CO2 Science questions and strongly agreed with nearly all the conspiracy theories. Whether they are scam responses or genuinely held beliefs, they are outliers in the data sets.

Also of note is that the average response score for both the 14 conspiracy theories and the Iraq War question increases with increasing acceptance of climate science. Thus the average responses suggest the opposite of what the paper claims.

Why the difference?

The average score suggests the opposite of the far more sophisticated findings of the published paper. To understand why, we need to look in more detail at the counts of average responses to the 14 conspiracy theories.

Figure 2: Counts of the average scores for the 14 conspiracy theories, and their percentage of total responses, split by conspiracy theory band and by acceptance of CO2 Science

This time the average score is calculated on the conspiracy theory bands, and it gives the opposite conclusion to Figure 1: the average conspiracy theory score decreases with increasing acceptance of CO2 Science.

The detail suggests why. For Score 1, the strong rejection of conspiracy theories, the percentage of respondents increases with increasing belief in climate change. For Score 2 the direction is reversed. This is an interesting result in itself. The dogmatic rejection of conspiracy theories appears to be related to belief in climate alarmism, but a less strong rejection, or a more sceptical stance, appears to be related to the degree of climate scepticism. I have produced a similar table for the Iraq War question.
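The split behind Figure 2 can be sketched as a cross-tabulation: band each respondent on both scales, count them, express each count as a percentage of its CO2 Science band, and average the conspiracy bands within each CO2 band. This is a minimal sketch under the same assumptions as before (hypothetical `co2` and `cy` fields on a 1 to 4 scale, and an assumed nearest-whole-number banding rule); the toy records are invented purely to exercise the code.

```python
import math
from collections import Counter, defaultdict
from statistics import mean

def response_band(avg: float) -> int:
    # Assumed rule: nearest whole number, halves rounded up.
    return math.floor(avg + 0.5)

def band_table(respondents):
    """Cross-tabulate conspiracy theory bands against CO2 Science bands.

    Returns ({co2_band: {cy_band: percent_of_that_co2_band}},
             {co2_band: mean_conspiracy_band})."""
    counts = defaultdict(Counter)
    for r in respondents:
        co2_band = response_band(mean(r["co2"]))
        cy_band = response_band(mean(r["cy"]))
        counts[co2_band][cy_band] += 1

    percentages, mean_bands = {}, {}
    for co2_band, row in sorted(counts.items()):
        total = sum(row.values())
        percentages[co2_band] = {
            cy: round(100 * n / total, 1) for cy, n in sorted(row.items())
        }
        # Average of the bands (not of the raw scores) in this CO2 band.
        mean_bands[co2_band] = mean(row.elements())
    return percentages, mean_bands

# Hypothetical toy records for illustration only; NOT the survey data.
sample = [
    {"co2": [1, 1, 1, 2], "cy": [2, 2, 2, 1]},
    {"co2": [1, 2, 1, 1], "cy": [1, 1, 1, 1]},
    {"co2": [4, 4, 4, 4], "cy": [1, 1, 1, 1]},
    {"co2": [4, 4, 3, 4], "cy": [1, 1, 1, 1]},
]
print(band_table(sample))
# ({1: {1: 50.0, 2: 50.0}, 4: {1: 100.0}}, {1: 1.5, 4: 1})
```

Reading down a conspiracy band across the CO2 Science bands is what reveals the opposite trends for Score 1 and Score 2.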

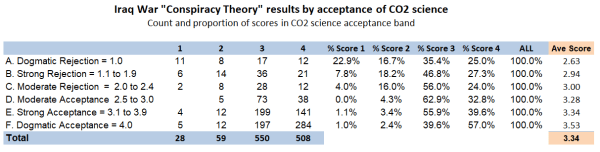

Figure 3: Count of responses to “The Iraq War in 2003 was launched for reasons other than to remove WMD from Iraq” by belief in climate science.

An interesting point about IraqNot4WMD is that the vast majority accept it, unlike the other conspiracy theories, which most reject. Strong acceptance of “The Iraq War in 2003 was launched for reasons other than to remove WMD from Iraq” appears to be related to belief in CO2 Science, but lesser acceptance is strongest among those with more moderate views. Fewer than 10% of responses rejected the statement. Amongst this small minority, disagreement with the statement is related to the rejection of CO2 Science.

Looking at the breakdown of the 14 conspiracy theories gives some further insights.

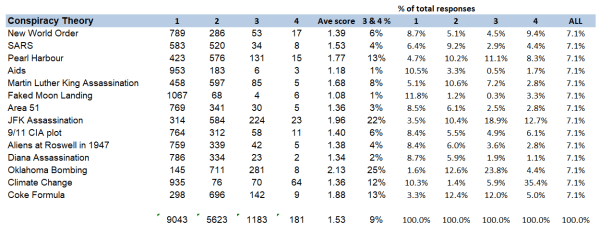

Figure 4: Analysis of 14 published conspiracy theories using the “published” data

The full title of the “Moon Hoax” paper is:

NASA faked the moon landing—Therefore (Climate) Science is a Hoax: An Anatomy of the Motivated Rejection of Science

The title is ill-chosen, given that the moon landing conspiracy's average score of 1.08 is the lowest of all the conspiracy theories, with just 10 out of 1145 respondents expressing any agreement and 93.2% expressing strong disagreement. Even worse, the title implies a chain of thought held by a small minority of just 3 respondents, from among hundreds of other potential combinations, without asking the respondents about it. Five years ago I looked at this in detail in “Lewandowsky’s false inference from an absurd correlation”.

There are just two conspiracy theories where acceptance exceeds one fifth of total responses: the JFK Assassination and the Oklahoma Bombing.

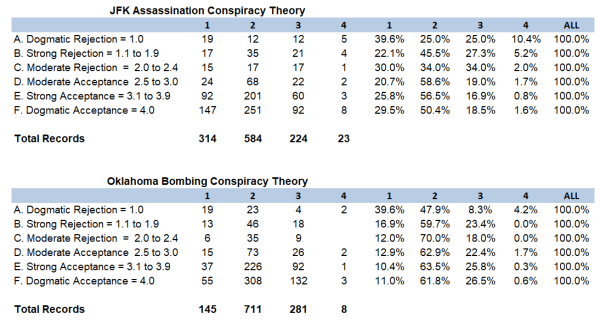

Figure 5: Analysis of the results from CYJFK and CYOKL.

The questions were:

The assassination of John F Kennedy was not committed by the lone gunman, Lee Harvey Oswald, but was rather a detailed, organized conspiracy to kill the President.

The Oklahoma City Bombers, Timothy McVeigh and Terry Nichols did not act alone but rather received assistance from Neo Nazi groups.

For both of these better-known conspiracy theories, Figure 5 shows that strong rejection is related to the rejection of CO2 Science, whilst weaker rejection is related to acceptance of CO2 Science. That is the very opposite of the average of the 14 conspiracy theories. The dogmatic rejection of conspiracy theories appears to be related to the degree of climate scepticism, but a less strong rejection (i.e. a more sceptical stance) appears to be related to the degree of belief in climate alarmism.

With a larger sample of those expressing belief in these conspiracy theories, the results are contradictory. For moderate acceptance, belief is related to the degree of climate scepticism for CYJFK, but to the degree of belief in climate alarmism for CYOKL. Although the responses are far fewer in number, similar results are present for strong acceptance of the conspiracies if the two scam responses 860 and 889 are removed. This is consistent with the JFK conspiracy being more appealing to conservatives, and the Oklahoma Bombing conspiracy being more appealing to (US) liberals.

The 12 Less Popular Conspiracy Theories

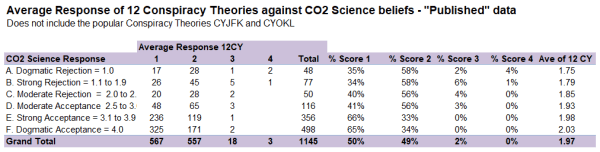

Figure 6: The average response to the 12 less popular conspiracy theories

The element that has not changed is the average conspiracy score.

There is very little difference between the “Ave of 14 CY” in Figure 2 and the “Ave of 12CY” end column in Figure 6. But removing the two most popular conspiracy theories amplifies the features in Figure 2. The stronger the acceptance of climate “science”, the greater the propensity to strongly reject a conspiracy theory, whilst the stronger the rejection of climate “science”, the greater the propensity to reject a conspiracy theory less strongly, or to be sceptical about it.

Conclusions and further thoughts

There are three major findings.

The first is the analysis of the Iraq War conspiracy theory question. This conspiracy theory was not included in either the pre-publication or final published versions of the paper. Nor were the responses included in the “published” data file that has been available since August 2012. The responses give mixed messages when related to belief in CO2 science. The stand-out finding is that strong acceptance of “The Iraq War in 2003 was launched for reasons other than to remove WMD from Iraq” appears to be related to belief in CO2 Science. This should not be a surprise, as the Iraq War was the responsibility of Republican President George W Bush, whilst the survey, conducted on very climate alarmist blogs, shows that strong belief in CO2 Science is very closely related to extreme socialist-environmentalist ideation.

The second is a new finding from reanalysing the data using pivot tables: there is no significant linear relationship between belief in conspiracy theories and the degree of acceptance or rejection of CO2 science.

Third, and deriving from the second point, the “Moon Hoax” data indicates important differences in how acceptors and rejecters of climate science handle new controversial claims. The “Moon Hoax” paper attributed the greater propensity of the rejecters of climate science to only moderately reject conspiracy theories to conspiracy ideation, a form of faulty thinking. The data indicates something radically different. When confronted with conspiracy theories for which there is little prior knowledge, the degree to which CO2 science is rejected indicates the likelihood of expressing moderate disagreement with the conspiracy theory. Conversely, the degree of expressed belief in CO2 science indicates the likelihood of immediately rejecting the conspiracy theory. But when confronted with conspiracy theories where there is broad knowledge, the likelihood of some agreement appears to be related to ideological views.

This finding can be put in slightly different language. The degree to which respondents “deny” CO2 science indicates the degree to which they will be sceptical of unfamiliar dogmatic proclamations thrust at them. Conversely, the degree to which respondents express belief in CO2 science indicates the degree to which they will reject out of hand unfamiliar dogmatic proclamations thrust at them that do not accord with their world view.

Traditionally, academic study in the quasi-sciences, along with non-sciences such as history and theology, involved careful evaluation of the evidence and of the differing arguments to reach conclusions. In climate “science” such a sceptical approach is evidence of climate denial. It follows from this consensus science logic that “correct” thinking is achieved by filtering experience through the dominant dogmas or mantras.

As a final point, I derived these conclusions by analysing the data in different ways using pivot tables. This suggests that responses are not linear, but reflect different approaches to processing data. The “Moon Hoax” paper takes a view analogous to that taken by the authorities in the Soviet Union. Lack of complete agreement with authority is evidence of denial. Not accepting consensus dogma because it conflicts with one’s perceptions is inconceivable to members of that consensus, so it must be the consequence of receiving misinformation, or of being psychologically deficient.

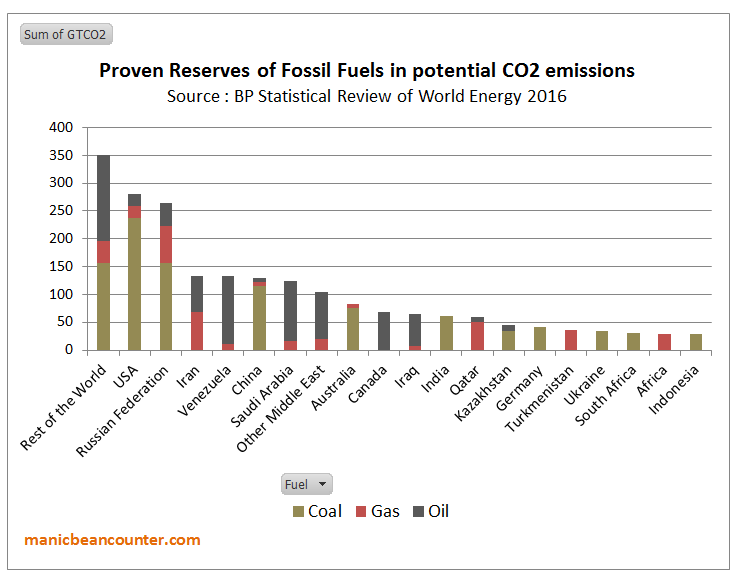

Figure 4 : Fossil fuel Reserves by country, expressed in terms of potential CO2 Emissions
